Hardware Acceleration (HWA) can be described as using dedicated computer hardware to perform certain operations faster than software running on a general-purpose CPU. Over the past few years, Millennium IT has pioneered a hybrid hardware acceleration system to reduce transaction times in its capital market software. We caught up with Thayaparan Sripavan, Lead – Hardware Accelerated Systems at Millennium IT, to learn more about this.

Let’s start with the beginning of this journey…

“We started exploring hardware acceleration around 2007, which is when everybody else started doing the same. Algorithmic trading and broker-side systems were gaining a lot of traction because Field Programmable Gate Arrays (FPGAs) and General Purpose Graphics Processing Units (GPGPUs) were gaining relevance in the financial sector. In 2009, we formed a team to explore where this could lead us; that’s how we started off.”

“And then, as we are part of the London Stock Exchange (LSE) Group, our parent company was keen on becoming an early adopter of hardware acceleration. We started working on a ‘Group Ticker Plant’ project, which embodied hardware acceleration. That turned out to be a successful proof of concept for taking new technology from conceptualization to production deployment. We are now exploring various avenues in this field.”

In simple terms, how does this platform provide an advantage?

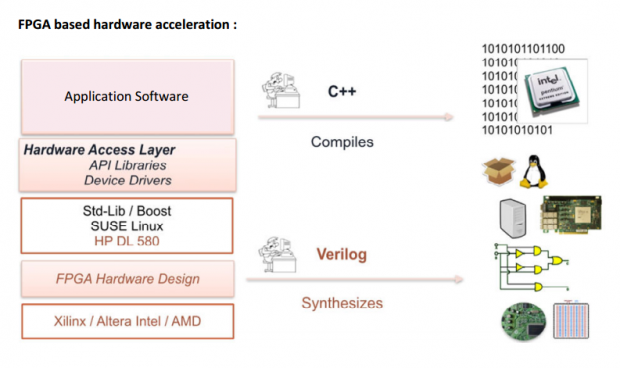

“An FPGA-based hardware acceleration platform per se doesn’t give any breakthroughs. It gives us broader scope to design systems the way we want. CPUs were traditionally optimized to process sequential tasks. FPGAs, on the contrary, provide massive fine-grained parallelism. So not every problem that’s solved by software can be significantly enhanced by hardware. However, if you have a problem that has inherent parallelism, such that various computations can be done without reference to other pieces of information, then that naturally lends itself to hardware acceleration.”

“So in the financial world, the benchmark happens to be compute-intensive, less data-intensive applications like Monte Carlo simulations and various pricing simulations. Generally, any form of compute-intensive application can be implemented readily on FPGAs.”

“FPGAs give complete control of the digital hardware, with no sharing of resources. Therefore responsive systems like medical equipment, signal instrumentation systems and mission-critical control systems are traditionally built on FPGAs. I think hybrid systems were the least popular of the lot until quite recently. Thanks to advances in manufacturing technology, we can pack more transistors onto the same die, which continues to accommodate more and more complex functionality.”
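As an editorial illustration of the kind of workload mentioned above, here is a minimal software sketch of a Monte Carlo pricing simulation. It is not MillenniumIT's code: the option model (a European call under geometric Brownian motion), the function names and all parameters are assumptions chosen for the example. The point is that each simulated path is computed without reference to any other path, which is the inherent parallelism that suits hardware acceleration.

```python
import math
import random


def simulate_payoff(rng, spot, strike, rate, vol, maturity):
    """Discounted payoff of a European call along one simulated path.

    Each call depends only on its own random draw, so paths are independent.
    """
    z = rng.gauss(0.0, 1.0)
    drift = (rate - 0.5 * vol ** 2) * maturity
    terminal = spot * math.exp(drift + vol * math.sqrt(maturity) * z)
    return math.exp(-rate * maturity) * max(terminal - strike, 0.0)


def monte_carlo_call(n_paths, spot, strike, rate, vol, maturity, seed=0):
    """Average the payoffs of n_paths independent paths.

    This loop runs sequentially on a CPU; a parallel implementation could
    evaluate many paths at once, each with its own random stream (assumption).
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_paths):
        total += simulate_payoff(rng, spot, strike, rate, vol, maturity)
    return total / n_paths


def black_scholes_call(spot, strike, rate, vol, maturity):
    """Closed-form Black-Scholes price, used only as a sanity check."""
    sqrt_t = math.sqrt(maturity)
    d1 = (math.log(spot / strike) + (rate + 0.5 * vol ** 2) * maturity) / (vol * sqrt_t)
    d2 = d1 - vol * sqrt_t

    def cdf(x):
        return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

    return spot * cdf(d1) - strike * math.exp(-rate * maturity) * cdf(d2)
```

Calling `monte_carlo_call(50000, 100.0, 100.0, 0.05, 0.2, 1.0)` should land close to the value returned by `black_scholes_call` for the same inputs, with the gap shrinking as the number of paths grows.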

Where would you fit hardware acceleration in high performance computing?

“HPC is a very vast area. At MillenniumIT, we provide solutions based on our products. Some parts of those solutions can be in hardware and some parts in software. We try to mix and match hardware acceleration and software in the most optimal manner to satisfy the requirements of High Performance Computing (HPC) for financial applications. Today it’s centered around three major values:”

1. Speed

2. Capacity

3. Determinism.

“Speed refers to the minimum time taken for processing and transmission. Capacity refers to the amount of information that can be processed and transmitted in a given time period. Determinism refers to the certainty of the level of performance under different operating circumstances.”
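To make the three values concrete, the sketch below shows one way they could be measured from a set of recorded message latencies. This is an editorial illustration under stated assumptions, not a MillenniumIT tool: the function name `summarize`, the choice of the 99th percentile and the use of standard deviation as a jitter measure are all hypothetical.

```python
import math
import statistics


def summarize(latencies_us, window_s):
    """Summarize latencies (in microseconds) observed over window_s seconds.

    - min_latency_us: speed, the best-case processing time
    - throughput_per_s: capacity, messages handled per second
    - p99_latency_us and jitter_us: determinism, how far and how often
      performance strays from the typical case (assumed metrics)
    """
    if not latencies_us or window_s <= 0:
        raise ValueError("need at least one latency and a positive window")
    ordered = sorted(latencies_us)
    # Nearest-rank 99th percentile.
    p99_index = max(0, math.ceil(0.99 * len(ordered)) - 1)
    return {
        "min_latency_us": ordered[0],
        "throughput_per_s": len(ordered) / window_s,
        "p99_latency_us": ordered[p99_index],
        "jitter_us": statistics.pstdev(ordered),
    }
```

Two systems with the same minimum latency can differ widely in the last two figures; a system with a small gap between minimum and 99th-percentile latency is the more deterministic one.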

Is it fair to say that Millennium IT is an HPC solutions provider?

“Most of our high-profile clients fall into that category. I would say that is one of our strongholds. We have solutions for small to medium-sized markets as well.”

How well have the 2007 initiatives paid off?

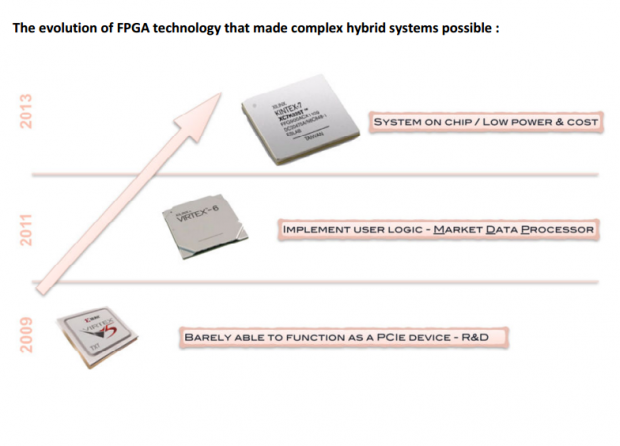

“I think the years 2007 and 2009 are particularly significant in the technology landscape, because that is when highly dense FPGA chips came to the market. The shift in their application from consumer electronics to hybrid systems started around this period. The year 2009 is significant because that’s when FPGA-mounted PCIe cards came to the market, enabling tight integration with software. We were lucky to explore our options at the right time, when this shift happened. We targeted at least a 10x gain in performance as the proposition for stepping into hardware acceleration. It was a big change in technology for us as well as for our clients, so we had to make sure that the benefit was worth the pain. That really paid off when we had our first product out in the market.”

What are the technical challenges encountered?

“We had to face a challenge that was very specific to this solution. The development lifecycle for FPGAs, or any hardware, is normally lengthy. It always starts with clear requirements and methodical development, which leads to testing and certification. But to adopt FPGAs into a hybrid system and make them coexist with software in an environment of constant change, we had to balance the best practices of hardware development with agile software development processes. There is no way that one process could exclusively displace the other; they had to coexist. We had to maintain the quality standards required by tier-1 stock exchanges whilst accommodating changes in the requirements. That class of problem was very specific to us.”

Where is your roadmap heading for HWA?

“In the last two to three years, there has been a lot of development happening around FPGAs and GPGPUs. Today we have FPGA chip providers talking of higher logic densities and System-on-Chip platforms. That being said, major computer manufacturers like IBM and Intel have also taken certain steps. IBM is proudly promoting the Coherent Accelerator Processor Interface (CAPI) for tight integration between CPUs and FPGAs. Intel is talking about its next-generation processors having an FPGA on the same die, in close proximity to the CPU and acting as a co-processor. So technology seems to be evolving in a direction that enables us to build hybrid functionality more easily. It looks like we will have a good unified development ecosystem in the future for methodical development of tightly integrated hybrid solutions.”

What career opportunities are there for graduates?

“Hybrid system design spans different areas. Today we have engineers who design and implement application-specific digital systems. Verification and validation of digital systems are done using standard methodologies like the Universal Verification Methodology. To bridge a piece of custom hardware and software, operating-system-specific device drivers need to be put in place. So we have people who work on FPGA design, verification, operating systems, device drivers and application software to make that integration happen.”

There are people who like both software and hardware. Would this be suitable for them?

“Definitely! You always have that breed of people in software who like assembly language. Likewise, those who like to be close to the bare metal of computer architecture are the people who would be excited to work with this stuff.”

Have any of your competitors used this technology?

“Some of them do claim to have hardware acceleration. However, at this juncture I have rarely come across anyone offering a hybrid solution to the mass market. Most of the giants, like the New York Stock Exchange (NYSE) and NASDAQ, have their own proprietary technology developed in-house. Others are more focused on selling appliances.”

Final thoughts on the development of this technology at MIT?

“I think it is more of an overall exploration process for the entire organisation. From where we started to where we are now, successfully getting hybrid systems out to our clients was not simply development work. There was a lot more effort involved in getting from prototype to production, and in supporting a tier-1 stock exchange. Most of that is centered around reliability, robustness, availability, spares, debug simplicity, remote troubleshooting and turnaround time. All of that needs to be ironed out before a system can make its way into a client environment. I think that ride was, of course, hard at times, but in the end it was worth it.”
